A brief history of artificial intelligence beating humans

It’s surprising that we haven’t held up Elon Musk as our saviour, given his public fears for a future in which super-intelligent AIs could take over the world. Haven’t we grown up with stories of AIs going bad? HAL, SHODAN, Skynet, WOPR, Ash—they should surely have taught us that cold computer brains are going to be our downfall.

But today we live in a world with the soothing voice of Siri and recent movie stories that focus far more readily on how the fallibility of AI is down to the fragile people behind it. But forget all that touchy-feely stuff about how we should be nice to robots. We keep building AIs and we’re starting to lose our edge to them. It’s time to remember the true horror of an unknowable artificial mind.

Here are a few stories of AIs beating humans in ways in which we should be profoundly afraid:

Eurisko the mad space admiral

In July 1981, Douglas Lenat, a Stanford University professor, entered a wargaming tournament with a fleet of starships that had been designed by an AI. The game, called Traveller: Trillion Credit Squadron, was all about using a set budget to create an all-powerful force from a vast range of rules and specifications, balancing armour against engines, weaponry against fuel; a little like fitting in EVE Online.

Lenat’s entry was ridiculous. While experienced players fielded small, varied fleets, Lenat’s AI had designed an enormous one comprising 96 ships, with 75 of them a single type that was so heavily armoured and bristling with missile launchers that it was almost immobile. It wiped away all comers, but at enormous cost. Its ships were sitting ducks, but there were so many of them: typically, Lenat would lose 50 units, but they’d comfortably take out all 20 of the opposing side.

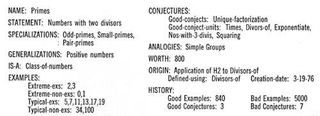

Lenat’s ruthless AI was called Eurisko. Lenat started programming it in 1976 as a system that he’d feed heuristics: sets of rules against which it would test different procedures to come up with solutions. It would continually mutate, modify and combine them, steadily refining a soup of ideas into success. TCS’ vast number of variables provided a perfect test-bed and Lenat set it to work over a month of 10-hour nights on 100 computers at Xerox PARC to calculate its final design.
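To get a feel for that mutate-and-combine loop, here is a toy sketch in Python. It is emphatically not Eurisko's code: the budget, the three ship attributes, the scoring formula and every function name are made up for illustration, and the real system worked on heuristics rather than directly on ship designs. It only shows the general shape of the idea, which is to keep a pool of candidates, tweak and blend them, and hold on to whatever scores best.

```python
import random

# Hypothetical toy model: a design is (armour, weapons, engines) points per ship.
BUDGET = 1000        # assumed total budget, not a real Traveller figure
SHIP_BASE_COST = 5   # assumed fixed cost per hull


def fleet_size(design):
    """How many ships of this design the budget buys."""
    return BUDGET // (SHIP_BASE_COST + sum(design))


def score(design):
    """Made-up fitness: ship count times per-ship toughness and firepower."""
    armour, weapons, engines = design
    return fleet_size(design) * (1 + armour) * (1 + weapons) * (1 + 0.1 * engines)


def mutate(design, rng):
    """Nudge one attribute up or down by a point, never below zero."""
    i = rng.randrange(len(design))
    changed = list(design)
    changed[i] = max(0, changed[i] + rng.choice((-1, 1)))
    return tuple(changed)


def combine(a, b, rng):
    """Build a child by taking each attribute from one parent or the other."""
    return tuple(rng.choice(pair) for pair in zip(a, b))


def evolve(generations=200, pool_size=20, seed=0):
    rng = random.Random(seed)
    pool = [tuple(rng.randint(0, 10) for _ in range(3)) for _ in range(pool_size)]
    history = []
    for _ in range(generations):
        children = [mutate(rng.choice(pool), rng) for _ in range(pool_size)]
        children += [combine(rng.choice(pool), rng.choice(pool), rng)
                     for _ in range(pool_size)]
        # Keep the best of parents and children, so the top score never drops.
        pool = sorted(set(pool + children), key=score, reverse=True)[:pool_size]
        history.append(score(pool[0]))
    return pool[0], history


best, history = evolve()
print("best design:", best, "ships:", fleet_size(best))
```

Because parents stay in the pool alongside their offspring, the best score can only hold steady or improve from one generation to the next, which is the "steadily refining" part in miniature.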

Eurisko was so effective that it forced TCS’ designers to change the rules for the following year, adding fleet agility to the criteria for winning. Eurisko entered again and won with a similar design, but a new tactic. This time it would destroy its own ships when they were crippled, keeping the agility of its remaining fleet high. Genius. Merciless.

The perfect game and near-perfect player of Checkers

Much AI research has been put into programs for playing the classic games: chess, checkers, poker, go. These games generally have simple rules but play out in profoundly complex ways, and their human players are often just as complex. When they went up against AIs, tragedy often followed.

The simplest of these games is checkers, and it was the first that saw an AI beat the best human player. The AI was called Chinook, developed by a team led by Jonathan Schaeffer at the University of Alberta specifically to oppose the world’s best player, Marion Tinsley. Tinsley was almost the perfect checkers player. Over his 45-year career he only lost five games against humans. Tinsley’s opponents usually played safe, going for draws in the face of Tinsley’s superiority, but Chinook was going for the win and played dangerously.

The first time Chinook and Tinsley faced each other was in 1990, and Tinsley won. The second was in 1992 in a bid for the world championship. Against 33 draws, Tinsley won four games to Chinook’s two, and so held the title. The third time they faced each other was in 1994. They drew six games, but Tinsley became ill and had to withdraw. By default, Chinook took the title, but it was a bittersweet victory. Tinsley was diagnosed with pancreatic cancer and died early the next year.

Chinook never directly beat him, leaving Schaeffer lastingly frustrated: no one else living was good enough to challenge it. So he devoted the next 12 years of his life to solving checkers by figuring out the perfect game. In 2007 he succeeded, only to find that the perfect game is a draw.

Read what the developer of Chinook has to say about Marion Tinsley.

That time Garry Kasparov accused a computer of cheating

At least Chinook didn’t face the same issues as Deep Blue when it beat Garry Kasparov at chess in 1997. Kasparov played badly due to the intense pressure around the event and, suspicious of an odd-seeming move during one of the games in which the AI should have noticed a chance to put Kasparov into check, resigned and accused Deep Blue’s creator, IBM, of cheating. He demanded to see evidence of the AI’s thinking for the move, but IBM couldn’t provide the information. Deep Blue used search trees to make its decisions, a vast quantity of information that was far too much to be able to hand over. It led to lasting conspiracy theories, but Deep Blue won 3.5 to 2.5 games over the series, making Kasparov the first world champion to lose to an AI.

Further reading on Kasparov and Deep Blue here.

The foul-mouthed chat bot that accidentally beat the Turing Test

You’ll have heard of the Turing Test, which Alan Turing proposed in 1950 as a way of judging whether an AI is capable of intelligent behaviour. The quest to pass it has resulted in many chatbots designed to trick people into thinking they’re human. The first famous one was Eliza in 1965, and today there’s an annual tournament called the Loebner Prize. There still hasn’t been consensus on whether it’s been passed, despite various claims, such as one in 2014 for a bot called Eugene, which passes itself off as a 13-year-old Ukrainian boy.

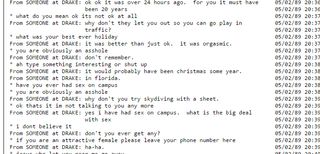

Actually, though, the Turing Test was passed long ago, in May 1989. Accidentally. The bot was called mGonz, and it was made by a 20-year-old University College Dublin undergraduate called Mark Humphrys. He’d left it on a server to take chat messages sent to him when he was away. And one day, someone from Drake University, Iowa, happened to come across it, ‘fingering’ the server and finding it responded with the line, “Cut this cryptic shit speak in full sentences”. What ensued was a one-and-a-half hour slinging match between this unnamed Iowan and the bot.

mGonz’s secret was that it was programmed to be a jerk. “Mark?” asks the human. “Mark isnt here and hes left me to deal with cretins like you,” replies mGonz. The conversation soon turns to sex, with mGonz repeatedly asking the human when he last got laid, leading him to claim it was the previous night, and that, “i have been laid about 65 times since january 1st 1989,” but he’s eventually worn down and admits it was over 24 hours before. Bot wins.

mGonz might not have been sophisticated, but it did demonstrate one thing rather well: men are putty when their sexual activity is questioned.

More about mGonz, and a link to the full chat transcript, here.

How Unreal bots exploited stupid human sociability

Botprize is basically the Turing Test for videogame AI. It’s a competition for Unreal Tournament 2004 bots that was established to encourage the development of AI that’s fun to play against because it’s human-like. Which is to say, not to make super-intelligent human-beating AI, but bots that are fallible and stupid, like us.

2014 featured two winners, MirrorBot and UT^2, which each scored 52% ‘humanness’, a figure calculated by dividing the number of times a player was judged to be human by the total number of times it was judged. By comparison, the top human player scored 53%, which makes the bots quite an achievement (and also perhaps a mark of the sheer inexpressiveness of Unreal Tournament 2004).
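The humanness score is just a ratio, as this small Python sketch shows. The function name and the tallies are hypothetical: the actual vote counts aren't given here, so 13 "human" verdicts out of 25 judgements is simply one pair of numbers that happens to produce the quoted 52%.

```python
def humanness(judged_human: int, times_judged: int) -> float:
    """Share of judgements in which the player was rated human."""
    if times_judged <= 0:
        raise ValueError("the player must have been judged at least once")
    return judged_human / times_judged


# Hypothetical tallies, chosen only to reproduce the 52% figure.
print(f"{humanness(13, 25):.0%}")  # prints 52%
```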

MirrorBot, which by a slight margin held the highest bot score, was rather less sophisticated than UT^2. UT^2 used evolutionary learning to develop human-like strategies, smartly picking them from a big recipe book according to situations. But MirrorBot was far cleverer. It exploited our social nature, our tendency to identify with behaviours that are close to our own, by simply observing human players and copying them. When it decided it was in view and wasn’t in threat of being killed, it’d perform the same movements and weapon switches human players did, and that was enough to make real players think it was one of them.
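The mirroring trick is simple enough to sketch in a few lines of Python. This is a guess at the shape of the rule described above, not MirrorBot's actual implementation: the class, method names, action strings and the "fight" fallback are all invented for illustration.

```python
from collections import deque


class MirrorSketch:
    """Toy mimic: replays observed human actions when it is safe to do so."""

    def __init__(self, memory=50):
        # Remember only the most recent observations (size is an assumption).
        self.observed = deque(maxlen=memory)

    def observe(self, action):
        """Record something a human player was seen doing."""
        self.observed.append(action)

    def next_action(self, in_view, under_threat, fallback="fight"):
        """Copy the oldest remembered action if watched and safe, else fall back."""
        if in_view and not under_threat and self.observed:
            return self.observed.popleft()
        return fallback


bot = MirrorSketch()
bot.observe("jump")
bot.observe("switch_weapon")
print(bot.next_action(in_view=True, under_threat=False))
```

The point of the sketch is how little is going on: there is no model of what makes behaviour human, only a record of what humans actually did and a check on when it is safe to play it back.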

Read more about the Unreal bots here.

The game-playing bots that are learning to beat pro players

Some AIs are very good at playing video games. DeepMind’s self-taught AI can beat human players at 29 of 49 old Atari games including Space Invaders and Breakout and keeps getting stronger.

Then there’s the AI designed to play StarCraft: Brood War. The best examples are pretty good: they can beat human players, but aren’t yet quite good enough to beat a pro-level player. The patterns behind their strategies are just a little too obvious and exploitable.

The organisers of the AIIDE StarCraft AI Competition estimate that StarCraft AI will finally have caught up in five to 10 years, but by that time, Brood War probably won’t have a pro scene anymore. It’s probably just as well that it can only get good at old games. We can keep enjoying new ones on our own terms.

The financial bots that make fortunes before we can read the news

You’re probably terrified enough of the simple amoral greed of the international banking scene, but what about the fact that trading is now extensively run by AIs? Called algorithmic or high-frequency trading, the practice has bots monitoring market fluctuations and international news and making incredibly fast bids to take advantage of the slightest opportunities. Being first is everything in this world; property prices around trading exchanges skyrocket due to traders opening offices as close to them as possible to reduce latency.

Because it involves so much money, the world of algorithmic trading is incredibly secretive, and one can rarely be quite sure how and when and where it’s happening. But experts are pretty sure of one example, in which a bot made $2.4 million in a day. It invested in a company called Altera a second after a rumour was published that claimed Intel was in talks to buy the company, the implication being that the bot read the story, interpreted it, decided it was an opportunity, and made the trade, all in just a second after the news hit. Humans don’t have a hope of keeping up.

Entrusting markets to bots has caused some scary moments, including the May 2010 Flash Crash, in which the Dow Jones plunged by 600 points before suddenly recovering, all as a result of bot-powered high-frequency trading. It’s all pretty arcane stuff, but essentially, a bot sold $4.1 billion of futures, putting 75,000 contracts on the market over the course of 20 agonising minutes. As it did so, prices fell, and other high-frequency trading bots began trading them, selling and reselling them like hot potatoes at a rate that hit 27,000 contracts in just 14 seconds. The original bot, meanwhile, simply accelerated its selling. The market watched in horror as everything went mad and then recovered, but it took months for the investigation to figure out how it happened. And now we’re all at the mercy of these things.
